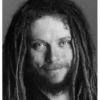

Jaron Lanier

Jaron Zepel Lanier is an American computer philosophy writer, computer scientist, visual artist, and composer of classical music. A pioneer in the field of virtual reality, Lanier and Thomas G. Zimmerman left Atari in 1985 to found VPL Research, Inc., the first company to sell VR goggles and gloves. In the late 1990s, Lanier worked on applications for Internet2, and in the 2000s, he was a visiting scholar at Silicon Graphics and various universities. From 2006 he began to work at...

Nationality: American

Profession: Artist

Date of Birth: 3 May 1960

Country: United States of America

The attribution of intelligence to machines, crowds of fragments, or other nerd deities obscures more than it illuminates. When people are told that a computer is intelligent, they become prone to changing themselves in order to make the computer appear to work better, instead of demanding that the computer be changed to become more useful.

Once you can understand something in a way that you can shove it into a computer, you have cracked its code, transcended any particularity it might have at a given time. It was as if we had become the gods of vision and had effectively created all possible images, for they would merely be reshufflings of the bits in the computers we had before us, completely under our control.

Evolution has never found a way to be any speed but very slow.

There is no difference between machine autonomy and the abdication of human responsibility.

If you want to know what's really going on in a society or ideology, follow the money. If money is flowing to advertising instead of musicians, journalists, and artists, then a society is more concerned with manipulation than truth or beauty.

Making information free is survivable so long as only limited numbers of people are disenfranchised. As much as it pains me to say so, we can survive if we only destroy the middle classes of musicians, journalists, and photographers. What is not survivable is the additional destruction of the middle classes in transportation, manufacturing, energy, office work, education, and health care. And all that destruction will come surely enough if the dominant idea of an information economy isn't improved.

People degrade themselves in order to make machines seem smart all the time.

Separation anxiety is assuaged by constant connection. Young people announce every detail of their lives on services like Twitter not to show off, but to avoid the closed door at bedtime, the empty room, the screaming vacuum of an isolated mind.

If you love a medium made of software, there's a danger that you will become entrapped in someone else's recent careless thoughts. Struggle against that.

If you get deep enough, you get trapped. Stop calling yourself a user. You are being used.

Why do people deserve a penny when they update their Facebook status? Because they'll spend some of it on you.

A fashionable idea in technical circles is that quantity not only turns into quality at some extreme of scale, but also does so according to principles we already understand. Some of my colleagues think a million, or perhaps a billion, fragmentary insults will eventually yield wisdom that surpasses that of any well-thought-out essay, so long as sophisticated secret statistical algorithms recombine the fragments. I disagree. A trope from the early days of computer science comes to mind: garbage in, garbage out.

If you're old enough to have a job and to have a life, you use Facebook exactly as advertised, you look up old friends.

Here’s a current example of the challenge we face: At the height of its power, the photography company Kodak employed more than 140,000 people and was worth $28 billion. They even invented the first digital camera. But today Kodak is bankrupt, and the new face of digital photography has become Instagram. When Instagram was sold to Facebook for a billion dollars in 2012, it employed only 13 people. Where did all those jobs disappear? And what happened to the wealth that all those middle-class jobs created?